The Internet of Things (IoT) is expanding rapidly, and the number of connected devices worldwide keeps climbing. Storing all the data from these devices and providing the computational power to process it centrally for AI might not be feasible. So it is necessary to bring AI closer to the periphery, and Edge AI technology does just that. We will analyze Edge AI in detail below, so read on to find out more!

Contents

- What is Edge AI?

- Edge AI Software

- Edge AI Hardware

- Cloud AI vs. Edge AI

- Edge AI vs. Fog Computing

- Edge AI Benefits

- Drawbacks of Edge AI

- Edge AI Industrial Examples and Use Cases

- The Future of Edge AI

- Fast Growth

- Improved Privacy and Security

- Better Orchestration

- Better Development and Operations of AI Models

- Wrap Up

What is Edge AI?

Edge AI is a combination of two terms: edge computing and artificial intelligence.

AI involves simulating human intelligence using machines and software. It usually relies on machine learning or deep learning to manage data and train models before deploying them for inference.

An infographic depicting the AI concept

On the other hand, edge computing is a distributed computing paradigm. It deploys the storage and computing resources close to the data source or the network edge, hence the name "edge."

An infographic depicting edge computing

So Edge AI handles artificial intelligence applications on the edge or end devices. These edge devices must have enough computational power to manage data creation and processing without streaming and storing data in the cloud.

Most people conceptualize edge computing using physical things like intelligent 5G cell towers & routers, edge servers, and network gateways. But this concept fails to accommodate the value Edge AI brings to consumer devices like smartphones, robots, and self-driving cars.

Edge AI Software

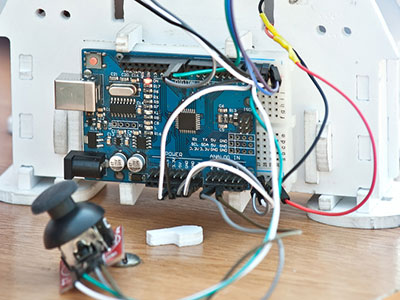

Edge AI software consists of machine learning algorithms that run directly on physical edge computing devices.

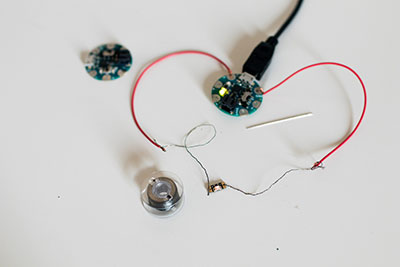

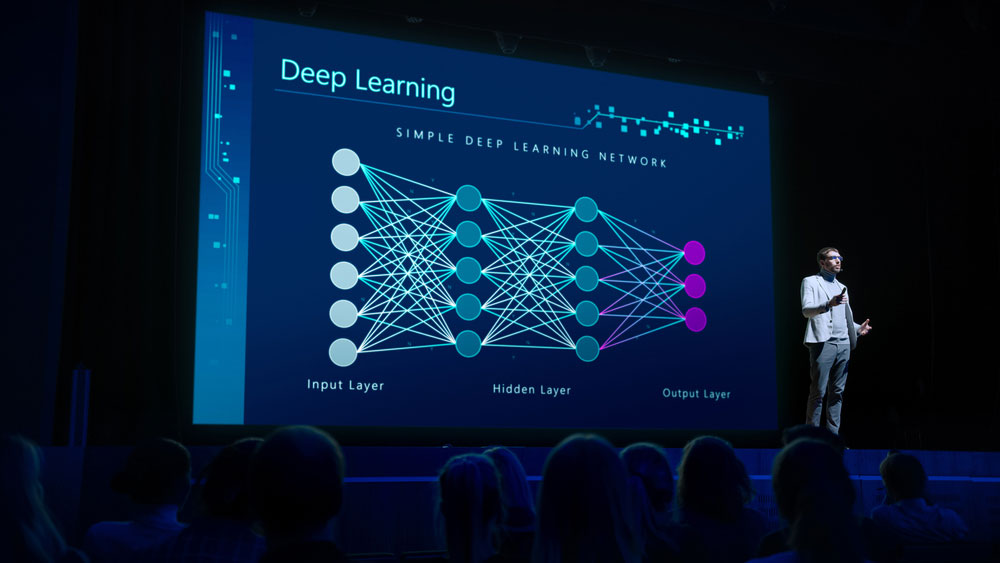

These algorithms include deep learning models that process data on the local device. Deep learning is a subset of machine learning that uses neural networks to mimic the brain's learning process.

A computer scientist doing a presentation on deep learning using a neural network

This operating mechanism lets end users get results in real time because the device does not depend on an internet connection or on other systems. Each edge device runs independently.
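To make on-device inference concrete, here is a minimal sketch of a tiny neural network making a prediction with no network call at all. The weights, layer sizes, and the `predict` function are hypothetical values chosen for illustration; a real device would load weights that were trained elsewhere.

```python
import math

# Hypothetical weights for a network with 2 inputs, 2 hidden units, 1 output.
# On a real device these would be trained elsewhere and stored locally.
W1 = [[0.5, -0.4], [0.3, 0.8]]  # one row of input weights per hidden unit
B1 = [0.0, -0.1]                # hidden-layer biases
W2 = [1.2, -0.7]                # output weights, one per hidden unit
B2 = 0.05                       # output bias


def relu(x):
    """Rectified linear activation: negative values become zero."""
    return max(0.0, x)


def sigmoid(x):
    """Squash a value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict(features):
    """Run one forward pass entirely in local memory."""
    hidden = [
        relu(sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(W1, B1)
    ]
    return sigmoid(sum(w * h for w, h in zip(W2, hidden)) + B2)


# Example: score one sensor reading made of two features.
score = predict([1.0, 2.0])
```

Everything the prediction needs lives on the device, which is why the result does not depend on connectivity.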

Edge AI Hardware

The machine learning models can run on several hardware platforms, from a small microcontroller unit (MCU) to complex neural processing devices.

IoT devices are also vital Edge AI hardware used in modern applications. Some edge IoT devices use embedded algorithms to monitor device behavior for data collection and processing. The edge computing device then makes decisions and corrects its mistakes to make better predictions in the future. And all this processing occurs without human involvement.
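One simple way to picture a device that monitors its own readings and keeps adjusting is a running-statistics check. The sketch below is an assumption made for illustration, not a method this article attributes to any product: it uses Welford's online algorithm to update a mean and variance with every reading and flags values that fall far outside the range seen so far. The class name, threshold, and warm-up length are all hypothetical.

```python
import math


class RunningAnomalyDetector:
    """Flags readings far from the running mean, using constant memory."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # allowed distance, in standard deviations
        self.warmup = warmup        # readings to observe before flagging
        self.n = 0                  # number of readings seen
        self.mean = 0.0             # running mean
        self.m2 = 0.0               # running sum of squared deviations

    def update(self, x):
        """Return True if x looks anomalous, then fold x into the statistics."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            is_anomaly = std > 0 and abs(x - self.mean) > self.threshold * std

        # Welford's update: no history of past readings is stored.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


# Example: temperature-like readings with one obvious spike.
detector = RunningAnomalyDetector()
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 20.3, 35.0, 20.0]
flags = [detector.update(r) for r in readings]
```

Because the detector stores only three numbers, it suits memory-constrained hardware such as a microcontroller. Note that this sketch also folds flagged readings into the statistics, a simplification a real deployment might not want.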

Cloud AI vs. Edge AI

The difference is simple. Edge AI involves deploying computing and storage resources closer to the users (network edge). On the other hand, cloud computing centralizes these resources into one or a few cloud data centers.

Cloud computing allows organizations to train large models rapidly, but inference can be a challenge. For instance, serving predictions from the cloud in response to user queries and actions adds network delay.

A data center with multiple servers

But Edge AI applications run on the edge computing devices themselves and don't need an internet connection to make a prediction. With no network round trip, the model avoids transmission latency, which means faster or real-time processing and inferencing.
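The latency argument comes down to simple arithmetic: response time is inference time plus any network round trip. The sketch below uses made-up numbers purely for illustration (a fast cloud server that is 80 ms away versus a slower local chip); they are assumptions, not measurements.

```python
def response_time_ms(inference_ms, network_round_trip_ms=0.0):
    """Total time the user waits: compute time plus time on the network."""
    return inference_ms + network_round_trip_ms


# Assumed figures: the cloud GPU is faster, but the request must travel.
cloud_ms = response_time_ms(inference_ms=10, network_round_trip_ms=80)

# Assumed figures: the edge chip is slower, but there is no round trip.
edge_ms = response_time_ms(inference_ms=40)
```

With these assumed figures the edge device answers sooner even though its processor is four times slower, because it skips the network entirely.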

If you look at how the two technologies evolved, it all started with desktop computers, embedded systems, mainframes, and cell phones. Cloud or edge artificial intelligence did not exist then.

Developers built applications for these devices mainly using the waterfall method, which took a long time. So they tried to pack as many features and tests as possible into annual updates.

Cloud computing then came into play and enabled agile development. It also highlighted various ways of automating cloud data centers. So it became possible to update cloud applications several times a day and to develop applications in smaller chunks.

Artificial intelligence applications in network edge devices extend the modular development capability beyond the centralized cloud. So most manufacturers and developers are moving cloud capabilities to the network edge to enhance AI performance.

End devices in an IoT concept


Edge AI vs. Fog Computing

Cisco introduced the term fog computing, which closely relates to edge computing. It involves extending the cloud server closer to the edge IoT devices, almost like a remote server. The setup improves security and reduces latency by handling computations near the edge devices.

With edge computing, processing occurs on the end devices that house the sensors or gateway devices close to the sensors. But with fog computing, data processing is further from the network edge (but not as far away as cloud computing) on devices connected to the LAN.

Edge AI Benefits

Edge computing has the following benefits compared to a centralized cloud environment.

- Low latency: Transferring data back and forth to the server in a cloud AI setup takes time. Edge computers process the data locally, where it is generated, which removes the round trip to centralized data centers.

- High speeds: Processing data locally delivers results significantly faster than sending it to a centralized system.

A smart factory combining big data, IoT, deep learning, 5G, and more

- Real-time analytics: Edge intelligence brings high-performance computing closer to the user, right where the IoT devices and sensors are.

- Low bandwidth requirement and cost: Handling processing at the edge greatly reduces the cloud storage and internet bandwidth needed to transmit video, voice, and high-fidelity sensor data.

- Better data security: Data is exposed to interception whenever it is transmitted. Edge AI handles data storage and processing on the edge devices, so the chances of a breach are lower because less data leaves the device. This security is a big plus for businesses handling sensitive data using AI.

- Better reliability: The combination of data security, high speed, and low latency creates a reliable system.

- Scalability: Cloud computing makes it easy to train and scale large models. Edge AI builds on this advantage to extend modular development capability beyond the cloud. This model makes the edge technology easily scalable.

Self-driving cars of the future

- Reduced power consumption: Centralized data centers gobble up a lot of energy because the servers must stay on whether the edge devices are online or offline. But with Edge AI, few or no cloud servers are needed.

- Low cost: Besides being energy efficient, the minimal network infrastructure required to run edge computers makes the platform cheaper to install and operate.
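The bandwidth benefit in the list above is easy to estimate with back-of-the-envelope arithmetic. The sketch below compares a camera that streams everything to the cloud with an edge device that only uploads detection events. The bitrate, event count, and event size are assumed values for illustration, not figures from a real deployment.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_stream_gb(bits_per_second):
    """Gigabytes uploaded per day by a continuous stream."""
    return bits_per_second * SECONDS_PER_DAY / 8 / 1e9


def daily_events_gb(events_per_day, bytes_per_event):
    """Gigabytes uploaded per day when only event summaries are sent."""
    return events_per_day * bytes_per_event / 1e9


# Assumption: one camera streaming compressed video at 4 Mbit/s.
stream_gb = daily_stream_gb(4_000_000)

# Assumption: the edge device sends 1,000 events a day at 2 KB each.
events_gb = daily_events_gb(1_000, 2_000)
```

Under these assumptions the continuous stream uploads about 43.2 GB a day, while the event summaries add up to about 0.002 GB.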

Drawbacks of Edge AI

Edge AI is not perfect. Some of its flaws include the following.

- Lost data: Most edge computers discard the data they deem irrelevant to free up their limited space. If the discarded data turns out to be relevant, it is lost for good. So you must program them carefully to avoid data loss.

- Low compute power: Edge devices can only run inference on relatively small models compared to centralized data centers. The technology cannot match cloud capabilities in creating and serving large models.

- Machine variations: There is a wide range of edge computers. And relying on these different technologies can lead to more failures.

- Security: Cloud-based security is evolving and getting more robust. And most AI cloud providers invest heavily in security. So your local network might be more vulnerable than cloud transmission if you don't implement security protocols.
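The lost-data drawback above is largely a programming concern, so a small sketch may help. The hypothetical `EdgeBuffer` below keeps the filtering rule explicit and counts everything it throws away, which makes data loss visible instead of silent. The class, its parameters, and the relevance rule are assumptions for illustration only.

```python
from collections import deque


class EdgeBuffer:
    """Fixed-size store that keeps relevant readings and counts the rest."""

    def __init__(self, capacity, is_relevant):
        self.capacity = capacity        # maximum readings held on the device
        self.is_relevant = is_relevant  # caller-supplied filtering rule
        self.kept = deque()
        self.dropped = 0                # readings discarded for any reason

    def add(self, reading):
        """Store a reading if it is relevant; return True if it was stored."""
        if not self.is_relevant(reading):
            self.dropped += 1
            return False
        if len(self.kept) == self.capacity:
            # Out of space: evict the oldest reading and record the loss.
            self.kept.popleft()
            self.dropped += 1
        self.kept.append(reading)
        return True


# Example: keep only readings above 50, with room for three of them.
buffer = EdgeBuffer(capacity=3, is_relevant=lambda r: r > 50)
for reading in [10, 60, 70, 20, 80, 90]:
    buffer.add(reading)
```

Tracking the `dropped` count gives operators a signal that the relevance rule or the capacity may need tuning before valuable data goes missing.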

Edge AI Industrial Examples and Use Cases

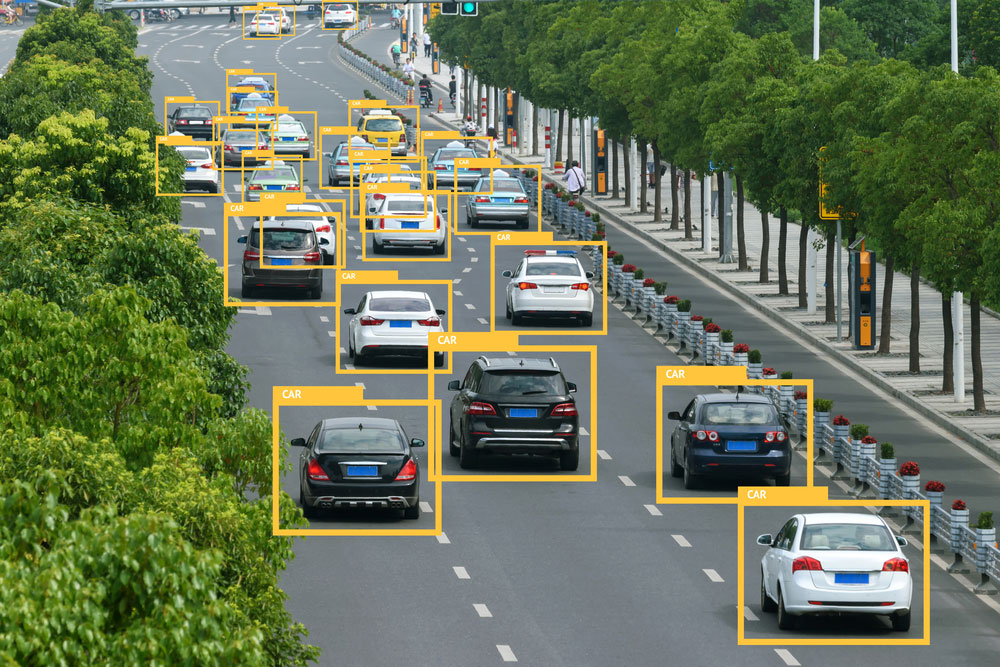

- Smart AI vision (computer vision applications like video analytics)

- Smart energy (ideal for running wind farms, solar farms, smart grids, etc.)

- AI healthcare (wearable devices for health monitoring, remote surgery, patient monitoring, etc.)

- Entertainment (virtual reality, augmented reality, etc.)

- Intelligent transportation systems (traffic congestion information sharing, traffic monitoring for optimizing traffic light timing, etc.)

Machine learning analytics for identifying vehicles in traffic

- Autonomous driving

- Intelligent factories (factory robots and the like)

- Fault detection in factory production lines

- Autonomous robots and robotic arms

- Product counting at checkouts

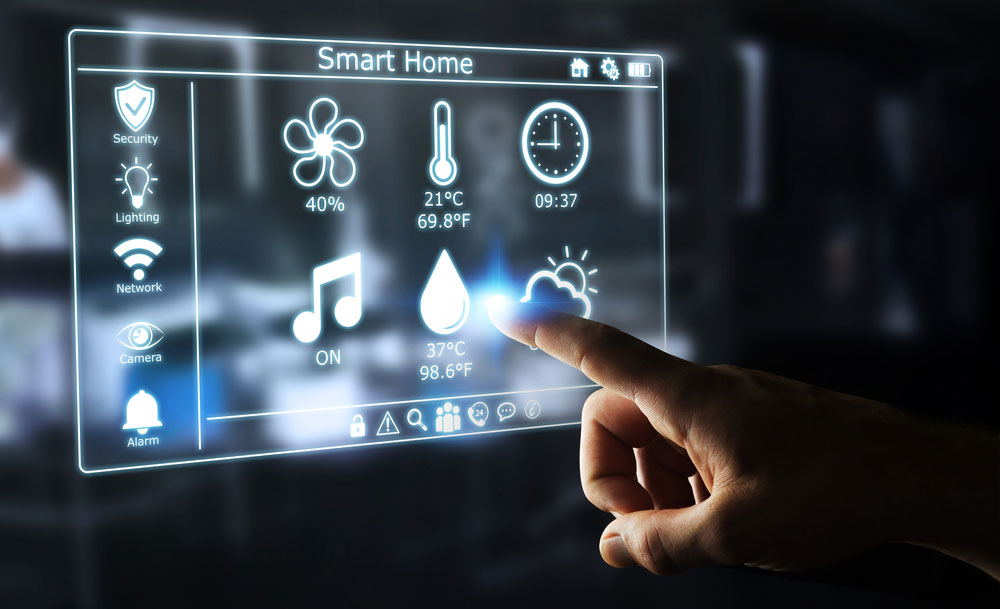

- Smart homes

The Future of Edge AI

Fast Growth

Edge AI is still emerging but growing fast. The Linux Foundation forecasts the power footprint of edge computers will increase from 1GW in 2019 to 40GW in 2028. Current edge devices include intelligent appliances, wearables, and smartphones. But industry & supply-chain automation, smart cities, and smart healthcare will grow the technology further in the future.

A smart home digital interface

Improved Privacy and Security

Emerging technologies like federated learning are likely to enhance the security and privacy of Edge AI. With this approach, each edge device trains the AI model locally on its own data. The device then pushes the resulting model updates to the cloud instead of the raw data, which minimizes privacy concerns.
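To show what "pushing model updates instead of raw data" can look like, here is a minimal sketch of the server-side step in the style of federated averaging: the cloud combines the weights sent by each device, weighted by how many samples each device trained on. The function name and the toy numbers are assumptions for illustration; real systems add steps such as secure aggregation that are not shown here.

```python
def federated_average(client_updates):
    """Combine model weights from several devices.

    client_updates is a list of (weights, num_samples) pairs, where
    weights is a list of floats of the same length for every device.
    """
    total_samples = sum(n for _, n in client_updates)
    num_weights = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(num_weights)
    ]


# Example: two devices report locally trained weights and sample counts.
updates = [
    ([1.0, 2.0], 10),  # device A trained on 10 local samples
    ([3.0, 4.0], 30),  # device B trained on 30 local samples
]
global_weights = federated_average(updates)
```

Only the weight lists and sample counts cross the network here; the readings each device trained on never leave it.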

Better Orchestration

Most edge devices do local inferencing on data directly seen by the device. But future devices might run local inferencing using data collected from adjacent sensors.

A soil sensor is used for checking the soil quality, managing water, and monitoring weather.

Better Development and Operations of AI Models

DevOps for AI models is at a much earlier stage than DevOps for general applications. So data management is more challenging when handling AI workflows that involve data orchestration, modeling, and deployment. Over time, the tooling will improve, making it easier to scale Edge AI applications and explore emerging AI architectures.

Wrap Up

In conclusion, Edge AI builds upon centralized cloud computing by distributing artificial intelligence to the network edge for better efficiency, reliability, and security. It is also a vital component of emerging technologies like 5G and IoT, so it will grow significantly in the future. That's it for now. If you have any questions or comments, drop us a note, and we'll be in touch.
